Agnostic Testing - Do you believe?

For a while now, the concept of ‘release’ has seemed perfunctory to me. I remember the first moment the thought hit me. It was over 10 years ago, and agile was gaining traction as the method of software delivery. We were releasing on a two-week cadence. As the test manager, part of my role was to drive the testing strategy for each release. As each release came and went, we stressed over how to make our testing as valuable as possible in the short time we had. As each release came and went, we inevitably lost time and had too much testing to perform in too little time. As each release came and went, we were left stressed and depleted, knowing that in two weeks we would need to repeat the exercise. It seemed crazy. It was at that point that I stopped thinking about release testing and started focusing on enabling quality testing to happen regardless of release.

The cementing of continuous delivery as a preferred method of deployment has further entrenched this idea in my mind, now that we aim to release multiple times a day. Instead of one release, we have many small releases. In addition, we contend with increased dependency on external software, which has its own release cadence. So when we say release, the release of what exactly? The infrastructure software, the database software, the automation software… the product?

Yet we still focus on pre-release product testing. We improve our testing tools, test environments, and testing processes to get more and faster feedback before release. To be fair, we are starting to focus more on recoverability over perfection, and on testing in the wild. [Late addition link by Charity Majors]. But I’m still seeing a lot of emphasis on exhaustive pre-release testing.

Putting aside the philosophical and financial considerations of exhaustive testing, I wonder if this fixation on testing everything is blinding us to what we really need our software testing for. We have become so focused on improving our pre-release testing through better tooling that we are forgetting to ask the question: where is the risk?

The risk is often not where we are looking. The history of black swans tells us this. In my mind, our risk is less related to each release and more related to how those releases behave over time. Time: the one factor we don’t make room for could be the one factor that catches us out.

What if we removed the concept of ‘release’ from our testing vocabulary? Instead of testing pre- and post-release, we would perform testing regardless of release. It becomes agnostic to release.

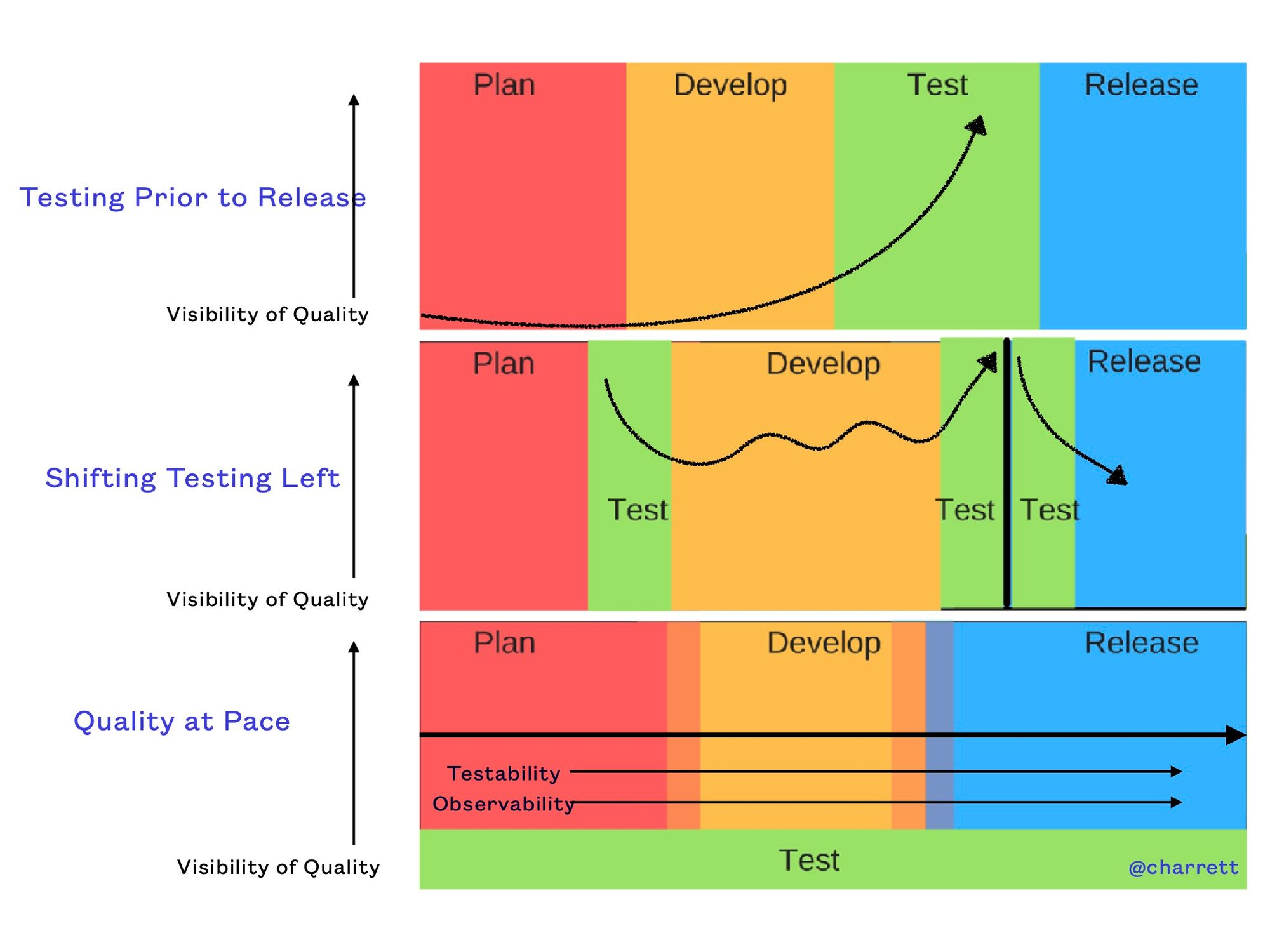

Perhaps we could have something like this:

Testing with this mindset frees up our thinking. It breaks the chains and limitations of time, so often a constraint on finding real value in testing. It allows us to explore and understand the system in ways we previously did not have the luxury of. We no longer have to test in three days or two hours; instead, we perform considered, valuable tests that focus on risks we have yet to fully understand.

Contemporary Risks in Continuous Deployment

Below are some questions that may help you think about potential risks outside of your releases:

How good is our ability to recover from a failed release, corrupted data, an integration failure, or downtime in one part of our system?

How well do we test our release process? How well do we architect our feature flags?

How reliant are we on external software ‘just working’? If it failed, how would we cope?

How dependent are we on serverless or cloud-based architecture? Is that bad?

How do we know our ecosystem is healthy? Can we detect symptoms prior to catastrophic events?

How do we identify risk in our software when we release multiple times in a day? Is there a better way?

Do we have too little or too much data to identify potential problems? Can we see these problems easily?

What oracles can we use to help us detect health over time? What (new) oracles do we need?

Are there other oracles within our organization we can use to help identify potential problems?

What rich datasets can we create to expose potential problems?

What security and performance tests can we run outside of the releases?

Final words on Agnostic Testing

This doesn’t mean we abandon pre-release testing altogether. But it might be time to reconsider our strategy, and perhaps our overemphasis on functionality at the expense of other quality attributes that could really hurt us in a nightmarish black swan moment.

Finally…

These are only some of the questions we could ask.

I bet many of you have additional questions. What do you think? Can you add to the conversation? You might be helping someone out!
